Using LLMs in development the traditional way often means working from outdated documentation, which leads to non-working code examples, version-incompatible solutions, and time wasted on verification. As developers, we've all been there: spending hours verifying AI-generated solutions, only to find they don't quite work with our specific version or setup. This guide explores how Context7, an open-source, documentation-aware tool, can improve Odoo development. Context7 enhances AI tools by pulling current, version-specific documentation from source repositories, so that responses are tailored to your tech stack and you spend less time wrestling with outdated documentation or incompatible code examples.

In this guide, we'll explore how to use Context7 as an MCP server to improve your Odoo development process. From the underlying technologies to practical implementation, you'll learn how to set up and use Context7 to create a more efficient and reliable development workflow, whether you're building custom modules, debugging existing code, or exploring Odoo's extensive feature set.

Context7 as an MCP server

LLMs (e.g., ChatGPT, Claude, Gemini) are AI models trained on vast amounts of text data, capable of understanding and generating human-like text. In coding contexts, they can assist with code generation, debugging, and documentation interpretation. However, traditional LLMs face limitations when dealing with specific, version-dependent documentation or recent code updates, as they're trained on historical data that may be outdated.

The Model Context Protocol (MCP) is a standardized interface that enables LLMs (or other AI models) to interact with various tools and external systems within a unified framework. It defines a structured communication protocol that allows models to access contextual information, execute functions, and interact with external services while maintaining a consistent API regardless of the underlying model implementation. MCP servers expose these capabilities, giving LLMs controlled access to external tools and data sources such as databases and filesystems (e.g., PostgreSQL, Google Drive), browser automation and web search (e.g., Puppeteer), communication and productivity applications (e.g., Slack, Google Maps), and many more.
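To make the "structured communication protocol" concrete: MCP messages are JSON-RPC 2.0, and a client invokes a server tool with the tools/call method. The sketch below only builds such a request; it does not talk to a real server. The tool name resolve-library-id and its libraryName argument are assumptions for illustration, so check the tool list your Context7 server actually reports.

```python
import json
from itertools import count

# Simple counter so every request gets a unique JSON-RPC id.
_request_ids = count(1)


def make_tool_call(tool_name: str, arguments: dict) -> dict:
    """Build a JSON-RPC 2.0 request for MCP's tools/call method."""
    return {
        "jsonrpc": "2.0",
        "id": next(_request_ids),
        "method": "tools/call",
        "params": {"name": tool_name, "arguments": arguments},
    }


# Assumed tool name and argument, used here only as an example.
request = make_tool_call("resolve-library-id", {"libraryName": "odoo"})
print(json.dumps(request, indent=2))
```

In practice the IDE's MCP client builds and sends these messages for you; the point is only that every tool, whatever it does, is reached through the same request shape.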

Context7 addresses one of the key limitations of traditional LLMs: their reliance on potentially outdated training data. It functions as a specialized MCP server that pulls current, version-specific documentation directly from source repositories, so that when developers interact with AI tools, they receive information that matches the version they are actually working with.

These three technologies work together to create a powerful development assistant. The LLM provides the basic language capabilities for understanding and generating code, MCP enables real-time integration of multiple information sources, and Context7 supplies documentation and examples that are current and relevant.
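As a rough illustration of how the three pieces fit together, the sketch below places retrieved documentation ahead of the question before it reaches the model. This is not how Copilot or Context7 assemble context internally; DocSnippet, build_prompt, and the sample snippet text are hypothetical names and data chosen for the example.

```python
from dataclasses import dataclass


@dataclass
class DocSnippet:
    """A piece of documentation returned by a documentation server (hypothetical shape)."""
    library: str
    version: str
    text: str


def build_prompt(question: str, snippets: list[DocSnippet]) -> str:
    """Put version-tagged documentation before the question so the model can answer from it."""
    context = "\n\n".join(
        f"[{s.library} {s.version}]\n{s.text}" for s in snippets
    )
    return f"Documentation:\n{context}\n\nQuestion:\n{question}"


# Placeholder snippet; a real one would come from the MCP server.
snippets = [DocSnippet("odoo", "17.0", "fields.Date stores a calendar date.")]
prompt = build_prompt("How do I add a date field to res.partner?", snippets)
print(prompt)
```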

Setting Up Context7 for Visual Studio Code

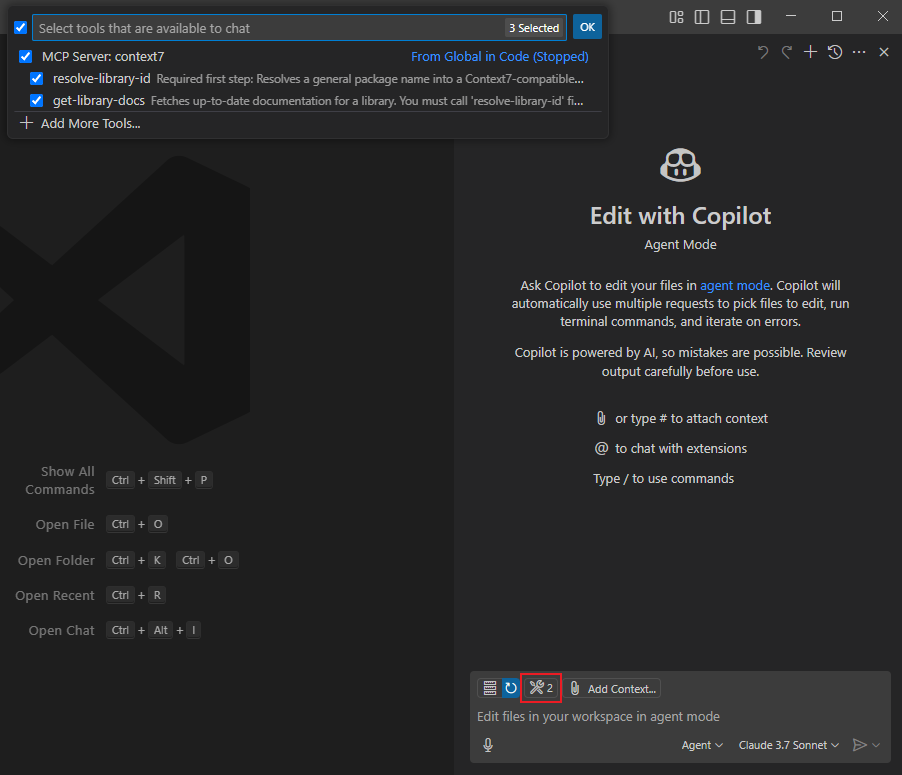

In this tutorial, we will add Context7 as an MCP server in Visual Studio Code together with GitHub Copilot, but the procedure is similar for other IDEs (e.g., Cursor, Windsurf). First, enable 'agent mode' by turning on the chat.agent.enabled setting in the Settings editor.

To use Context7 as an MCP server across all projects, add the server configuration to your VS Code user settings in settings.json.

```jsonc
// settings.json
{
  "mcp": {
    "servers": {
      "context7": {
        "command": "npx",
        "args": ["-y", "@upstash/context7-mcp"]
      }
    }
  }
}
```
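If you already have other settings or MCP servers configured, the Context7 entry should be merged in rather than pasted over them. The following is a minimal sketch of that merge on an already-loaded settings dictionary. The function name add_context7 and the sample "other" server are hypothetical, and note that a real settings.json may contain comments, which Python's json module cannot parse, so this does not read or write the file itself.

```python
import json
from copy import deepcopy

# Server entry taken from the configuration shown above.
CONTEXT7_SERVER = {"command": "npx", "args": ["-y", "@upstash/context7-mcp"]}


def add_context7(settings: dict) -> dict:
    """Return a copy of the settings with agent mode enabled and Context7 registered."""
    merged = deepcopy(settings)
    merged["chat.agent.enabled"] = True
    # Create the nested "mcp" -> "servers" objects only if they are missing.
    servers = merged.setdefault("mcp", {}).setdefault("servers", {})
    servers["context7"] = deepcopy(CONTEXT7_SERVER)
    return merged


# Hypothetical existing settings with one unrelated MCP server.
existing = {"editor.fontSize": 14, "mcp": {"servers": {"other": {"command": "node"}}}}
updated = add_context7(existing)
print(json.dumps(updated, indent=2))
```

Working on a copy keeps the original dictionary untouched, which makes it easy to compare before and after.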

Now you can use Context7 in Agent mode.

Note

For more information about the MCP settings in Visual Studio Code or the MCP server settings for Context7, refer to the official Visual Studio Code and Context7 documentation.

Context7 in Odoo Development

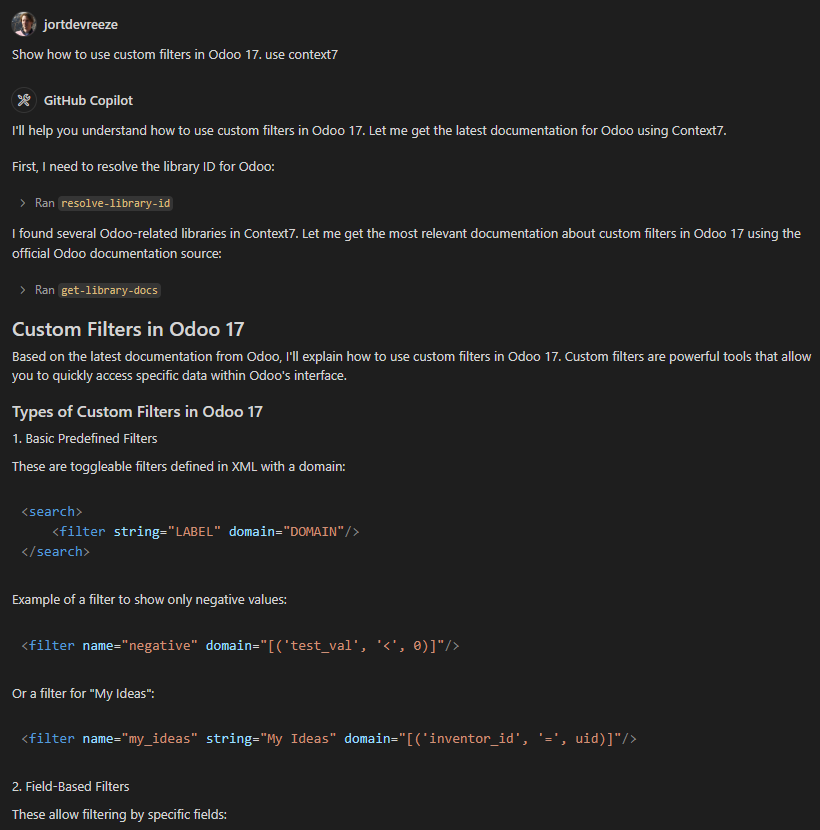

Now that we have set up Context7 in Visual Studio Code, all you have to do is add 'use context7' to your prompt. The prompt will then use Context7 to fetch up-to-date code examples and documentation into your LLM's context. You can, for example, ask questions about how something works.

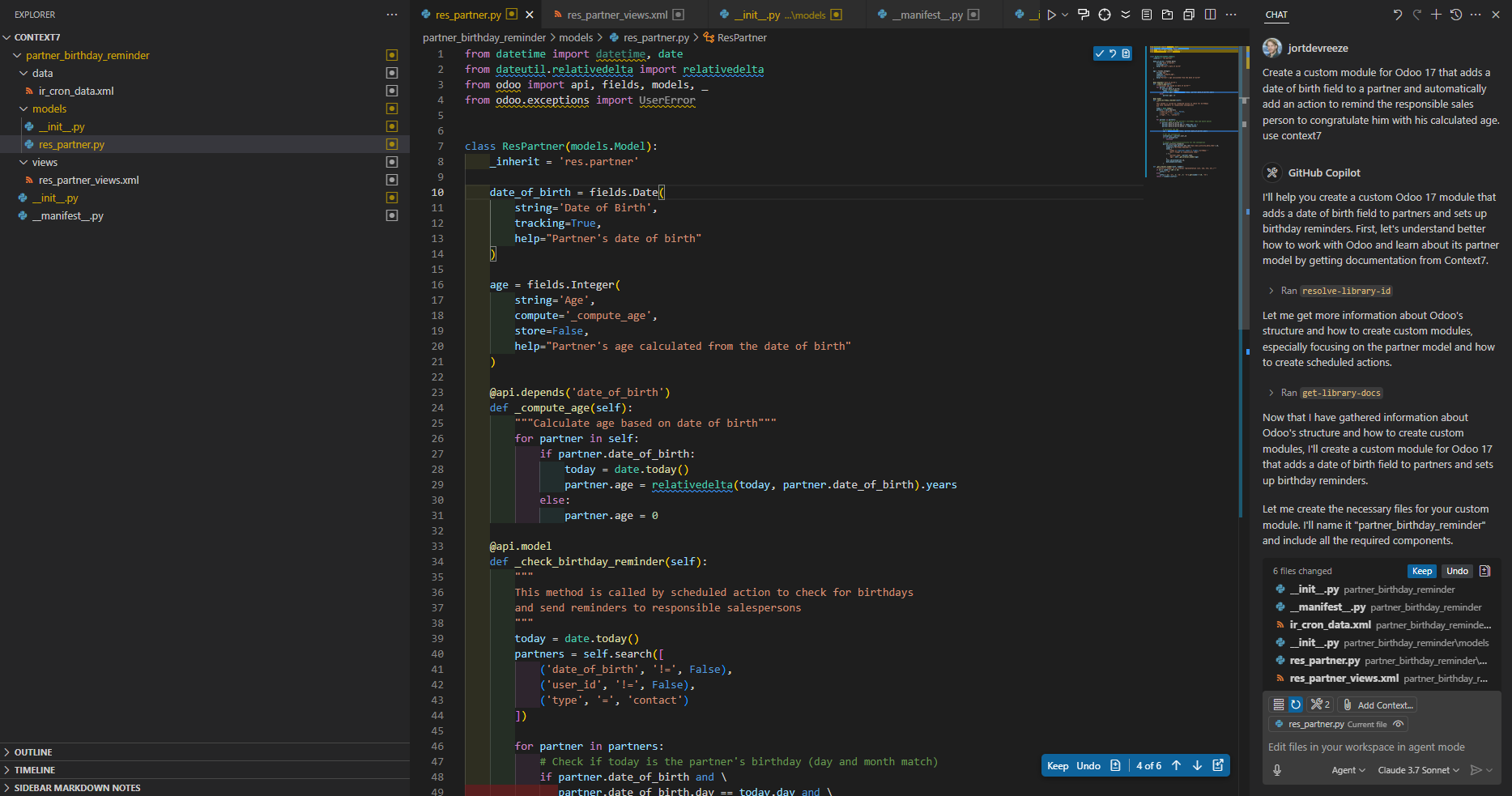

Or you can let Copilot start building a custom module. For example, I want a module that adds a date of birth field to a partner and automatically adds an action that reminds the responsible salesperson to congratulate them.
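The generated module is not reproduced here, but the date logic such a reminder needs can be sketched in plain Python. The function names are hypothetical, and treating 29 February birthdays as 28 February in non-leap years is an assumption. In an actual Odoo module, logic like this would presumably be called from a scheduled action on the partner model.

```python
from datetime import date


def next_birthday(date_of_birth: date, today: date) -> date:
    """Return the next occurrence of the birthday on or after `today`."""

    def birthday_in(year: int) -> date:
        try:
            return date_of_birth.replace(year=year)
        except ValueError:
            # Born on 29 February: fall back to 28 February in non-leap years.
            return date(year, 2, 28)

    candidate = birthday_in(today.year)
    if candidate < today:
        # This year's birthday has already passed, so use next year's.
        candidate = birthday_in(today.year + 1)
    return candidate


def is_birthday(date_of_birth: date, today: date) -> bool:
    """True when a reminder should be created today."""
    return next_birthday(date_of_birth, today) == today


print(next_birthday(date(1990, 5, 17), date(2024, 5, 18)))  # 2025-05-17
print(is_birthday(date(1990, 5, 17), date(2024, 5, 17)))    # True
```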

Using Context7, the prompt created all the files and built the module using the Odoo 17 documentation as a reference.

While Context7 significantly improves AI assistance by providing current, version-specific documentation, it is not infallible, and results should still be verified. Compared to traditional LLM approaches, however, the suggestions were noticeably more accurate and more relevant to the targeted version. Although we've focused on Odoo development in this guide, the same Context7 integration can support development in other frameworks and languages, from Flutter mobile apps to React web applications, making it a versatile addition to any developer's toolkit.